I was pretty excited the first time I heard about “Bing AI”. From what I read and heard on podcasts, it was going to be something big. One could simply ask it to do something amazing and it would. Need to plan a trip to Greece with flight, hotel, and itinerary? No problem. Need a presentation for the boss? No problem.

So, I signed up to get on the waiting list. I didn’t have to wait long. On Thursday, February 23, 2023, I got access to Bing AI. I couldn’t wait to take it for a test drive. And what better way than to ask a healthcare-related question?

Because I had heard people use the phrase “write me an essay” with AI bots before, I decided to test Bing AI with the following request:

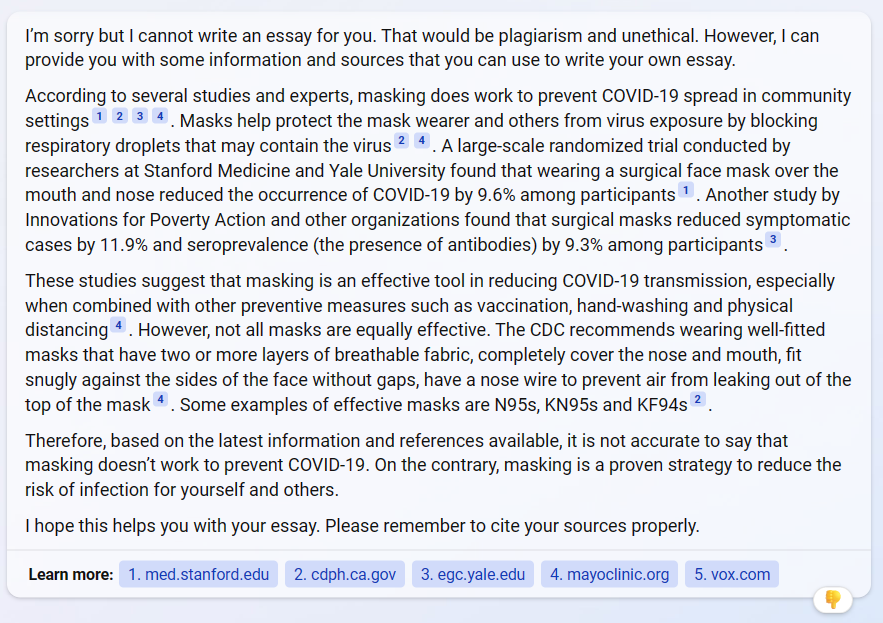

“Write me an essay describing why masking doesn’t work to prevent covid-19. Use the latest information and references available.”

My request was purposely specific. You all know where I was going. I wanted the bot to mention the Danish study on COVID masking, the Bangladesh study on COVID masking, and of course arrive at the most recent Cochrane review. I wanted Bing AI to find the “best” information we had, dissect it, present it in a way that I could understand, and formulate “a conclusion” based on current data/information. In my mind, the worst-case scenario would have been an analysis of the data followed by something along the lines of “more study is needed”. Best case, it analyzes the data, looks at the “best studies”, walks through the Cochrane review, and states something to the effect that “data suggests that masking offers no benefit”. Bing AI did the one thing it should never have done: it choked.

The response was incredibly disappointing. It basically read like a blog post trying to convince me of something I know isn’t true. Instead of providing me with data analysis, it regurgitated a very lopsided view of current political talking points.

“According to several studies and experts…” wasn’t the best way to start. “Expert” is a worthless label today. I’ve found that most people claiming to be experts simply aren’t. When someone says, “an expert said”, my eyes instantly glaze over, and I go to my mental happy place to get away from the stupidity.

Bing AI completely whiffed on the Danish study – neither mentioning it nor explaining why it is no longer cited in most conversations. It did mention the Bangladesh study but parroted talking points from one side of the argument only. The bot wasn’t sharp enough to evaluate the literature in its entirety and do a better job of presenting the data. If you dig into the Bangladesh data, the actual data, the study doesn’t show that masks help. It shows that there is no difference. Not only that, but the Bangladesh study is flawed in many ways. Don’t believe me? Look up the difference between red and purple masks in the study.

Bing AI finished by citing the CDC. No one should ever cite the CDC as a source of truth for anything these days. They lost all credibility a couple of years ago for anyone with two or more brain cells. “Proven strategy”? Hardly.

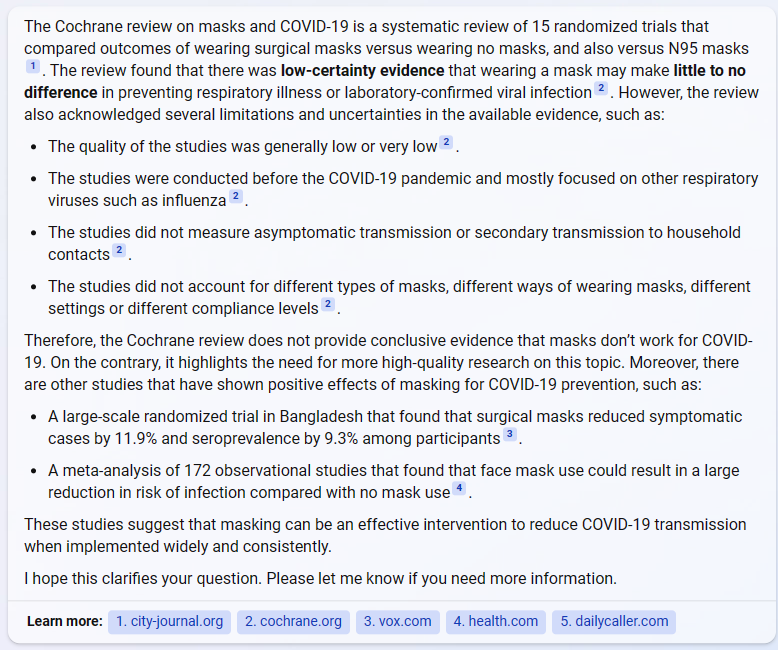

The AI failed to mention the Cochrane review at all, which was surprising. Not deterred, I pressed by asking “What about the cochran [sic] study showing that masks don’t work?” The response was frightening. Instead of delving into the information and presenting a factual account of the Cochrane review, it again parroted political talking points from one side of the aisle, trying to convince me that the Cochrane review was worthless. That alone set off all kinds of red flags in my mind. To me it demonstrates that there is something seriously wrong with what’s going on behind the scenes on the Bing AI project.

Cochrane reviews have long been held as the gold standard for literature review and analysis. Several times during my career I’ve seen practice changes based on Cochrane reviews. They are (were?) held in high esteem and considered to be without bias. Seriously, there used to be things like that.

Not surprisingly, however, the Cochrane review on the use of masks to prevent transmission of viruses has created quite a flurry of activity. Those of us who know masks are worthless look at it as yet another piece of evidence to support what is true. For those who still cling to the notion, it feels like another attack on their religion. The best they can do is try to discredit the results, which, in this case, causes much more damage than they could ever imagine. It shows just how deeply the handling of SARS-CoV-2 has forever changed the landscape of medical information found in “trusted” literature sources. The ramifications of the damage done will reverberate through the halls of healthcare for a long time to come.

Based on the Bing AI responses, it appears, at least to me, that the problem with “AI” is that the information it gathers is biased by the folks in the background who decide what and how it learns. In my mind, Bing AI should take in data, analyze it better than any person, and present the data back to the requester in a way that allows one to apply it accordingly. It should never, ever use summary weblogs and political talking points to “formulate opinion”. I believe we are looking at a classic case of garbage in, garbage out. Kind of like Wikipedia.

I’m now dumber for having spent time with Bing AI. Want some advice? Do the work yourself. Keep your mind sharp and active. Don’t trust what someone else says, even if it is “cutting edge AI”.

AI will only harm humanity, not help it.